Hi!

This portfolio is something I use to showcase work I’ve done in the past.

You’ll notice some lack of polish on this - this is due to time pressure and my expectation of a mostly-engineer audience who will focus on the technical aspects instead of the presentation. And I’m certainly not trying to show off my skill as a web designer here 😅

This is ordered by “significance of technical achievement” - the things I’m most proud of are at the top, and the honorable mentions are at the bottom :)

Monado’s hand tracking

This is by far the coolest thing I’ve worked on so far. This is a low-latency, accurate and smooth optical hand tracking system, built from scratch (not on top of Mediapipe, although I tried that too) with a completely open-source implementation and dataset.

Many considered this problem one best left to the big leagues of Ultraleap and Oculus. I thought differently, and we ended up with a really good system that basically works on any VR headset with calibrated cameras.

At Collabora I’ve done maybe 90% of the relevant work:

- Scraping, collecting, and generating training data

- Writing and wrangling machine learning training infrastructure

- Writing realtime inference code in C++

- Creating a realtime nonlinear optimizer to get smooth 3D hand pose trajectories

- Writing various infrastructure to deal with camera streaming, camera calibration, distortion/undistortion, etc.

This took me about a year, right out of college. I was a pretty huge novice to computer vision, machine learning, and algorithms, but through hard work and waging holy war against impostor syndrome, we made it happen!

Full-system demo

(Twitter link, Collabora blog post)

These are first-person recordings on my custom Project North Star headset, Valve Index and Reverb G2 showing off our hand tracking. These were recorded live, nothing added in post!

Artificial dataset generator

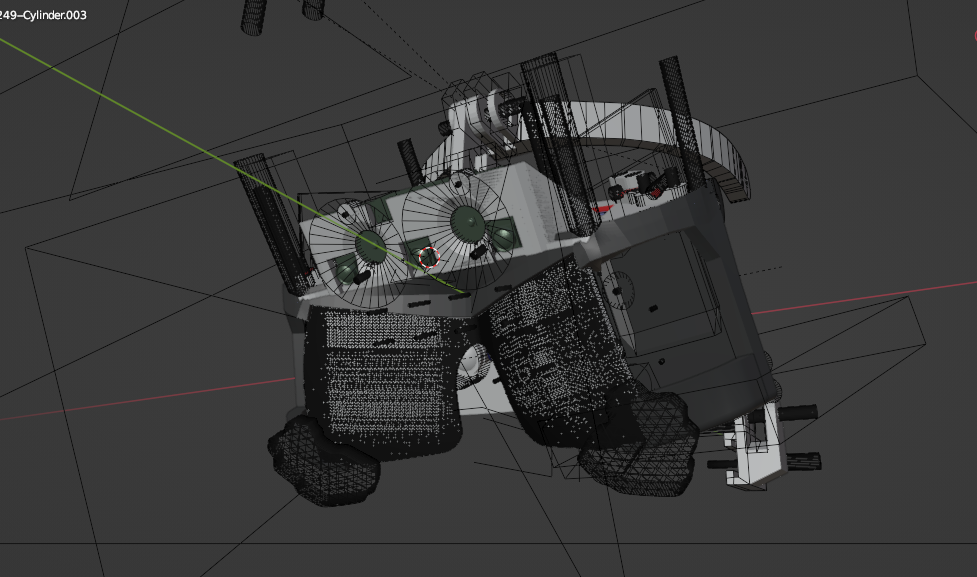

I collected various hand scans from asset stores, releasing the open-licensed ones here. I retopologized the ones that needed it and rigged all of them myself to ensure accurate deformation across the range of human hand poses.

To automate hand animation and rendering, I developed a Blender Python script that communicates with a manager process written in C++, receiving mocap data with which it animates the hand and renders annotated images.

To manage multiple Blender instances, I built a multithreaded C++ manager program that communicates with several concurrent Blender instances over sockets. It retrieves mocap data by combining randomly chosen head/wrist pose mocap data collected from Lighthouse tracking with finger pose data either collected from an earlier version of our hand tracking pipeline or procedurally generated. Then it retargets the mocap data using nonlinear optimization to fit each hand scan exactly and sends it to the Blender instance.
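To give a rough feel for the manager-to-Blender link described above, here is a minimal sketch of passing one frame of pose data over a socket. Everything specific in it is an assumption for illustration: the wire format (a 4-byte length prefix followed by JSON), the field names, and the helpers `send_msg`, `recv_msg` and `demo_round_trip` are hypothetical. The real manager is written in C++, and its actual protocol isn't described here.

```python
import json
import socket
import struct


def send_msg(sock, payload):
    """Send a dict as one length-prefixed JSON message (assumed wire format)."""
    body = json.dumps(payload).encode("utf-8")
    # 4-byte big-endian length header, then the JSON body.
    sock.sendall(struct.pack(">I", len(body)) + body)


def _recv_exact(sock, n):
    """Read exactly n bytes, raising if the peer closes early."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_msg(sock):
    """Receive one length-prefixed JSON message and decode it to a dict."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


def demo_round_trip():
    """Stand-in for manager -> Blender: both ends live in this one process."""
    manager_end, blender_end = socket.socketpair()
    try:
        # Hypothetical frame layout: a wrist position plus five finger curls.
        frame = {
            "frame": 0,
            "wrist_position": [0.0, -0.1, -0.3],
            "finger_curls": [0.1, 0.2, 0.3, 0.4, 0.5],
        }
        send_msg(manager_end, frame)
        return recv_msg(blender_end)
    finally:
        manager_end.close()
        blender_end.close()
```

Length-prefixing is one simple way to keep message boundaries intact on a stream socket, which matters when several frames are queued up for a busy renderer.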

This work was pretty challenging, requiring me to learn a new-to-me part of Blender, pick up a fair bit about orchestrating headless Blender instances, and reuse our nonlinear optimizer in a novel way to create diverse, smooth hand trajectories.

Neural nets

Our hand tracking pipeline currently has two main ML components: the hand detector and the keypoint estimator. The hand detector is very small and doesn’t run every frame - this is intentional to reduce compute load. The keypoint estimator is a bit bigger and runs every frame in each camera view for each hand.

The keypoint estimator does a few things:

- Estimates hand landmarks as 2D heatmaps

- Estimates the distance of each hand landmark from the camera, relative to the middle-proximal joint, as a 1D heatmap

- Estimates each finger’s curl value

- Tries to predict whether or not the input image contains a hand, which we use as one of the termination conditions to stop tracking the hand under high occlusion or in the case of erroneous detections
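For readers who haven't worked with heatmap outputs before, here is a small sketch of one common way to turn a heatmap into a coordinate: take the weighted centroid of the heatmap values. This is an assumption for illustration only. The function names are hypothetical and this isn't necessarily how our pipeline decodes its heatmaps.

```python
def expected_index_1d(heatmap):
    """Weighted centroid of a 1D heatmap, in bin units."""
    total = sum(heatmap)
    if total <= 0:
        raise ValueError("heatmap has no mass")
    return sum(i * v for i, v in enumerate(heatmap)) / total


def expected_position_2d(heatmap):
    """Weighted centroid of a 2D heatmap (a list of rows), as (x, y) in pixels."""
    total = sum(sum(row) for row in heatmap)
    if total <= 0:
        raise ValueError("heatmap has no mass")
    x = sum(col * v for row in heatmap for col, v in enumerate(row)) / total
    y = sum(r * v for r, row in enumerate(heatmap) for v in row) / total
    return x, y
```

Compared to picking the single brightest bin, a centroid gives sub-bin precision, which helps when the heatmaps are much lower resolution than the input crop.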

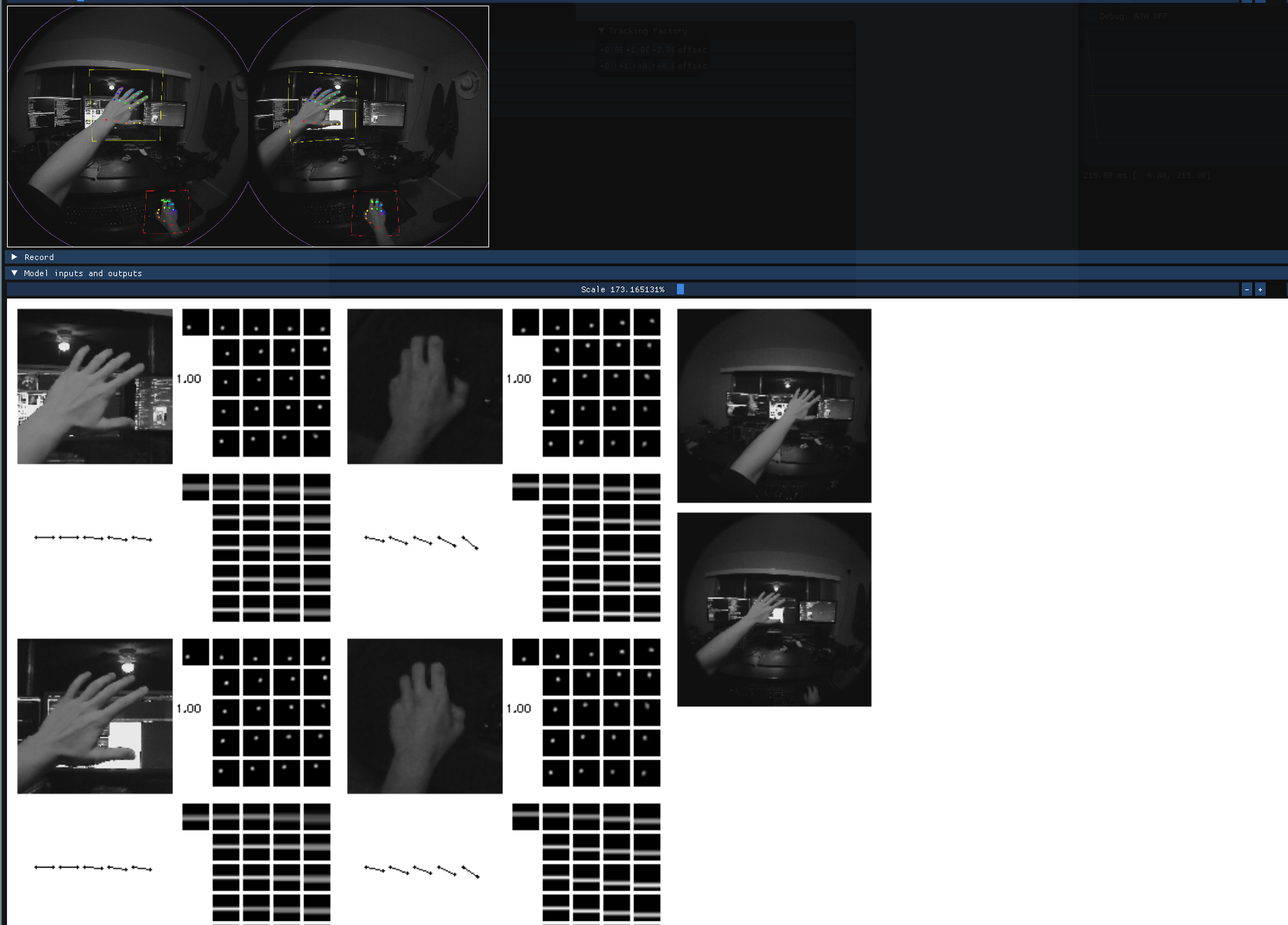

Here’s some imagery of the debug view of our optical hand tracking pipeline. The four cropped views are the input to the keypoint estimator models. The number to the right is the confidence that these are hands (you can see it’s working! the probability is one, and they’re definitely all hands!), the dots to the right are the 2D heatmaps, the lines below and to the right are the 1D heatmaps, and the curling lines at the bottom are a visualization of the finger curl values.

The hand detector is simple: it takes full camera views cropped down to 160x160 and outputs an 8D vector corresponding to the center, radius and confidence of the left and right hand bounding boxes.
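As a sketch of how an 8D output like that might be unpacked: two hands times (center x, center y, radius, confidence) gives eight numbers. The element ordering, the `HandBox` type, the `parse_detector_output` helper and the 0.5 confidence threshold below are all assumptions for illustration, not the layout our model actually uses.

```python
from dataclasses import dataclass


@dataclass
class HandBox:
    center_x: float
    center_y: float
    radius: float
    confidence: float


def parse_detector_output(vec, min_confidence=0.5):
    """Split an 8-element output into left/right boxes.

    Assumed layout: [left cx, cy, r, conf, right cx, cy, r, conf].
    A hand whose confidence is below the threshold is reported as None.
    """
    if len(vec) != 8:
        raise ValueError("expected 8 values, got %d" % len(vec))
    boxes = {"left": HandBox(*vec[0:4]), "right": HandBox(*vec[4:8])}
    return {
        name: box if box.confidence >= min_confidence else None
        for name, box in boxes.items()
    }
```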

Both of these models were pretty heavily inspired by Facebook’s MeGATrack paper and milkcat0904’s PyTorch reimplementation of the model architectures, although we’ve iterated a bit on the base model architectures by now.

Also, to be clear, we don’t have any of Facebook’s weights or dataset - we did train these from scratch! Shoutout to Facebook for doing quite actually-open research (publish your datasets next pls) and milkcat0904 for their very solid reimplementation!

Nonlinear optimizer

This is what we use to turn model predictions into a smooth 6DOF hand trajectory. We’re using what I now know to be a fairly normal architecture, also inspired by Facebook’s MeGATrack paper.

The story of how we got there was pretty interesting, though.

- Late 2021: Started by implementing very simple SGD from finite differences, from scratch in C++. I did end up getting it working, but it was very slow

- Late 2021-early 2022: Read a bunch about Kalman filtering, figured out that we’d definitely need something iterative - I remember Iterative Extended Kalman Filters and single constraint at a time looking somewhat promising

- Early 2022: Wrote a very weird optimizer that iteratively ran Cyclic Coordinate Descent IK on all hand joints, using basically the centerpoint of all the downstream joints as end effector/target joints, and used Umeyama within the loop to position the root joint. This worked but it was jittery and we had very little control over it

- Early-mid 2022: Realized Levenberg-Marquardt was the way to go, especially after reading the FB paper. Started by using Eigen’s unsupported LM implementation with finite differences. This worked but was pretty slow. Messed around with Ceres, Enoki and SymPy - ended up using Ceres’s tinysolver and packaging it here. We’re still using tinyceres, pretty happy with it!
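To make the "Levenberg-Marquardt with finite differences" step in that timeline a bit more concrete, here is a toy sketch in plain Python. It is not our optimizer (that one is C++ on top of Ceres’s tiny solver). The problem it solves, recovering a 2D point from its distances to three anchors, and all the names in it are made up for illustration.

```python
import math


def numeric_jacobian(residual_fn, params, eps=1e-6):
    """Forward-difference Jacobian: one extra residual evaluation per parameter."""
    base = residual_fn(params)
    jac = [[0.0] * len(params) for _ in base]
    for j in range(len(params)):
        bumped = list(params)
        bumped[j] += eps
        shifted = residual_fn(bumped)
        for i in range(len(base)):
            jac[i][j] = (shifted[i] - base[i]) / eps
    return base, jac


def solve_linear(a, b):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def levenberg_marquardt(residual_fn, params, iterations=50, damping=1e-3):
    """Minimize the sum of squared residuals with damped Gauss-Newton steps."""
    params = list(params)
    n = len(params)
    cost = sum(r * r for r in residual_fn(params))
    for _ in range(iterations):
        if cost < 1e-20:
            break
        res, jac = numeric_jacobian(residual_fn, params)
        jtj = [[sum(row[i] * row[j] for row in jac) for j in range(n)] for i in range(n)]
        jtr = [sum(row[i] * r for row, r in zip(jac, res)) for i in range(n)]
        for i in range(n):
            # Marquardt-style damping: scale the diagonal of J^T J.
            jtj[i][i] += damping * (jtj[i][i] + 1e-12)
        step = solve_linear(jtj, [-g for g in jtr])
        candidate = [p + s for p, s in zip(params, step)]
        new_cost = sum(r * r for r in residual_fn(candidate))
        if new_cost < cost:
            params, cost = candidate, new_cost
            damping *= 0.1  # step helped: behave more like Gauss-Newton
        else:
            damping *= 10.0  # step hurt: behave more like gradient descent
    return params


# Toy problem (hypothetical): find a 2D point from its distances to three anchors.
ANCHORS = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
TRUE_POINT = (1.0, 1.0)
MEASURED = [math.hypot(TRUE_POINT[0] - ax, TRUE_POINT[1] - ay) for ax, ay in ANCHORS]


def range_residuals(p):
    """One residual per anchor: predicted distance minus measured distance."""
    return [math.hypot(p[0] - ax, p[1] - ay) - d for (ax, ay), d in zip(ANCHORS, MEASURED)]


estimate = levenberg_marquardt(range_residuals, [2.0, 2.0])
```

The finite-difference Jacobian is the slow part: it costs one full residual evaluation per parameter on every iteration, and that cost grows with the number of parameters being optimized.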

(I really wanted to include visuals here, but then realized I don’t quite know what a nonlinear optimizer looks like. If you know how to take a picture of one, please contact me!)

Steam launch

This is still ongoing! The store page is finally live, but we have a waiting period where the page has to be on “coming soon” before we can publish binaries.

I did all the graphic design, copywriting, and filling-out-Steamworks-forms for this store page. Definitely not groundbreaking - I’ve seen way too many sunset-colored gradients in VR software branding - but somebody had to do it and I stepped up.

The store page is here and all the code is here.

Note that this codebase pulls in Monado as a submodule for the heavy lifting; on its own it only handles camera communication and acting as an OpenVR driver.

Some general other notes about Monado’s hand tracking

I did most of the work, but at the same time there’s no chance I could have done it without a few key people’s mentorship, ideas and friendship.

Many thanks to:

- Jakob Bornecrantz for helping with general Monado infrastructure stuff and always reviewing my code.

- Rylie Pavlik for pointing me to the right resources for learning C++, C++ template metaprogramming, and Eigen3. And for helping me learn various tracking algorithm fundamentals.

- Mateo de Mayo for several key good ideas, writing a lot of Monado’s shared HT/SLAM camera pipelining and dataset recording infrastructure, and encouraging me to try realtime nonlinear optimization.

- Marcus Edel and Jakub Piotr Cłapa for helping with the very early ML stuff and encouraging me to take the time to really learn PyTorch.

- Nikitha Garlapati and Hampton Moseley for giving me a hand with training data.

- Christoph Haag, Lubosz Sarnecki, Pete Black and all the other Monado contributors for giving me such a solid base of hardware drivers and infrastructure to build on top of.

The code is a little bit spread out, so here are some links:

- Main inference code

- Repo hosting final trained models

- Repo for data generation, annotation, model training and pipeline evaluation

- SteamVR driver

Project North Star

Designed and built a custom North Star variant

As of 2023, this is the only North Star headset that does all tracking on a single stereo pair - the more common design still uses an Ultraleap tracker paired with some off-the-shelf SLAM tracker, is heavier and has less comfortable headgear.

More images

I'm also very happy about this magnet snap-on mount for a through-the-lens camera. Makes demo recording very easy :)


For this project, I did all the design, printing, assembly and calibration. I think I iterated about 40 times (i.e. updating the design, waiting 7 hours for my 3D printer to make it, reassembling it with the new part and testing the feature). This was really fun and a big part of my 2020 and 2021!

Created a custom optical calibration method for North Star HMDs

I iterated a lot on this, picking up traditional camera calibration methods, edge detection, thresholding, marker detection, etc. along the way.

The main focus of my work here was empirical calibration, where you place a calibrated stereo camera inside an HMD where the user’s eyes would be, draw a pattern on the display, and just save the mapping from display UV to tanangles as a mesh.
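Once you have a mesh like that, using it is basically a lookup with interpolation. Here is a minimal sketch, assuming the mesh is stored as a regular grid over display UV in [0, 1] with a (tan_x, tan_y) pair at each grid point. The storage layout and the `lookup_tan_angles` name are assumptions for illustration, not what the driver actually does.

```python
def lookup_tan_angles(mesh, u, v):
    """Bilinearly interpolate a UV -> tan-angle mesh.

    mesh[row][col] is a (tan_x, tan_y) pair. Rows span v in [0, 1] and
    columns span u in [0, 1]. The mesh needs at least 2x2 grid points.
    """
    rows, cols = len(mesh), len(mesh[0])
    # Clamp so lookups slightly outside the display still return something.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    gx = u * (cols - 1)
    gy = v * (rows - 1)
    # Top-left corner of the grid cell containing (u, v).
    c0 = min(int(gx), cols - 2)
    r0 = min(int(gy), rows - 2)
    fx = gx - c0
    fy = gy - r0

    def lerp(a, b, t):
        return a + (b - a) * t

    result = []
    for k in range(2):  # k = 0 for tan_x, k = 1 for tan_y
        top = lerp(mesh[r0][c0][k], mesh[r0][c0 + 1][k], fx)
        bottom = lerp(mesh[r0 + 1][c0][k], mesh[r0 + 1][c0 + 1][k], fx)
        result.append(lerp(top, bottom, fy))
    return tuple(result)
```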

This was my very first “real” computer vision project, and I ended up trying a lot of things before I learned enough to make something that actually worked! In sequential order, this is what I remember doing:

- Canny edge detection - didn’t work well because of bloom

- Used thresholding - drawing one line at a time on the vertical and horizontal axes, keeping track of which pixels in the camera image were above a certain threshold for that line, then for each grid point taking the intersection of active pixels for the vertical and horizontal lines that point corresponded to. This worked but was very unreliable and needed a lot of tuning

- Just drew Aruco markers on the display and recorded the average position of each corner. This was pretty reliable and is where I stopped.
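The thresholding attempt above boils down to set intersection. Here is a small sketch of the idea on plain nested lists. The function names are hypothetical, and collapsing the overlapping pixels to their centroid is my assumption about a reasonable final step.

```python
def active_pixels(image, threshold):
    """Set of (x, y) pixels brighter than the threshold in one camera image."""
    return {
        (x, y)
        for y, row in enumerate(image)
        for x, value in enumerate(row)
        if value > threshold
    }


def grid_point_from_lines(vertical_image, horizontal_image, threshold):
    """Locate a grid point as the overlap of one vertical and one horizontal line.

    Each image is the camera view captured while a single line was drawn on
    the display. Returns the centroid of the overlapping pixels, or None if
    the two lines never overlap in the camera image.
    """
    overlap = active_pixels(vertical_image, threshold) & active_pixels(
        horizontal_image, threshold
    )
    if not overlap:
        return None
    cx = sum(x for x, _ in overlap) / len(overlap)
    cy = sum(y for _, y in overlap) / len(overlap)
    return cx, cy
```

Bloom makes the bright regions wider than the drawn lines, so the result is sensitive to the threshold, which fits with how much tuning this approach needed.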

I also wrote a force-directed-graph-based system of smoothing the mapping out and coming up with believable grid points for areas that were obscured. If I were doing this today I’d just use nonlinear optimization, and I’d probably use known lens geometry as a prior instead of assuming literally nothing, but hey this way did work!

The most recent code is here, and I think I have some older stuff here and here. All of this stuff was pretty game-jam-quality - I did write it, but it was a long time ago and it’s not representative of the kind of work I can do now.

Created almost all of Monado’s North Star driver and Ultraleap driver

The code is here:

- North Star driver

- Optical undistortion evaluation code for North Star

- Ultraleap driver (written before I joined Collabora)

Various other demos

xrdesktop is fun too. Needs a lot of work to be practical for day-to-day use, but it looks cool! pic.twitter.com/yMtxar766A

— moshi turner (@moshimeowshiVR) April 12, 2021

WebXR running on @mortimergoro @webkit on Linux with @MonadoXR runtime, @ultraleap_devs hand tracking and @NorthStarXR's headset! pic.twitter.com/tzGfdSbDrC

— moshi turner (@moshimeowshiVR) April 27, 2021

Fun with the Intel Realsense D435 pic.twitter.com/6i0mt9rGf0

— moshi turner (@moshimeowshiVR) August 2, 2021

Various other contributions to Monado

At Collabora, I have contributed a lot to Monado! Apparently I’m up to 122 merge requests as of March 2023! You can see them here, and there’s also a cool graph here which has deemed me the fourth-biggest contributor.

Off the top of my head, I’ve:

- Written a frameserver driver for DepthAI cameras

- Added various improvements to the Vive/Index and WMR drivers, adding support for hand tracking and more config options

- Worked a ton on Monado’s device setup code

- Worked on Monado’s camera calibration and frame streaming infrastructure

- Created a hardware+undistortion driver for SimulaVR’s upcoming HMD

- Worked on Monado’s debug UI

- Worked on Monado’s internal auxiliary library and math code

- Added basic One Euro filtering as a Monado util

- Added a pose history util to let us correctly interpolate through past poses as a runtime

- Helped review various OpenXR extensions
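For anyone unfamiliar with the euro filtering mentioned in the list above: the One Euro filter is a low-pass filter whose cutoff frequency rises with the signal’s speed, so slow motion gets smoothed heavily while fast motion stays responsive. Below is a scalar sketch of a common formulation of it. It is not Monado’s implementation, and the class layout and default parameters are just for illustration.

```python
import math


class OneEuroFilter:
    """Scalar One Euro filter: an adaptive exponential low-pass filter."""

    def __init__(self, min_cutoff=1.0, beta=0.0, d_cutoff=1.0):
        self.min_cutoff = min_cutoff  # cutoff (Hz) used when the signal is still
        self.beta = beta              # how quickly the cutoff grows with speed
        self.d_cutoff = d_cutoff      # cutoff (Hz) for smoothing the derivative
        self.t_prev = None
        self.x_prev = 0.0
        self.dx_prev = 0.0

    @staticmethod
    def _alpha(cutoff, dt):
        """Smoothing factor of a first-order low-pass filter for this time step."""
        tau = 1.0 / (2.0 * math.pi * cutoff)
        return 1.0 / (1.0 + tau / dt)

    def filter(self, x, t):
        """Feed one sample x taken at time t (seconds); returns the filtered value."""
        if self.t_prev is None:
            # First sample: nothing to smooth against yet.
            self.t_prev, self.x_prev = t, x
            return x
        dt = t - self.t_prev
        if dt <= 0.0:
            # Out-of-order or duplicate timestamp: keep the last output.
            return self.x_prev
        # Estimate the speed and smooth it with a fixed cutoff.
        dx = (x - self.x_prev) / dt
        a_d = self._alpha(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # Faster motion raises the cutoff, which means less smoothing and less lag.
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = self._alpha(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.t_prev, self.x_prev, self.dx_prev = t, x_hat, dx_hat
        return x_hat
```

In practice you would run one filter per scalar channel (for example, each component of a position), feeding it each new sample along with its timestamp.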

StereoKit

I’ve made a lot of contributions to StereoKit over the years, using it all the time for internal tools/demos. You can see my code contributions here :D

Google Summer of Code 2022 mentor and OpenGloves

During the summer of 2022, I mentored Dan Willmott of LucidVR/OpenGloves as he added OpenGloves support to Monado.

You can see his blog post about it here and his Monado merge requests are here.

We also met up in the UK and built a pair of gloves for me.

This project as well as his earlier work landed him an internship at Valve where he still works, which has been pretty cool to see :)

OpenComposite

I’ve contributed a few times to OpenComposite, a translation layer that lets you run OpenVR games on OpenXR runtimes without needing SteamVR in the loop. My opinions about SteamVR have changed over the years, but OC is a great tool and at least VRChat and Beat Saber work great with it.

A while ago I wrote a blog post about some of that work here, and you can see all my MRs so far here :)